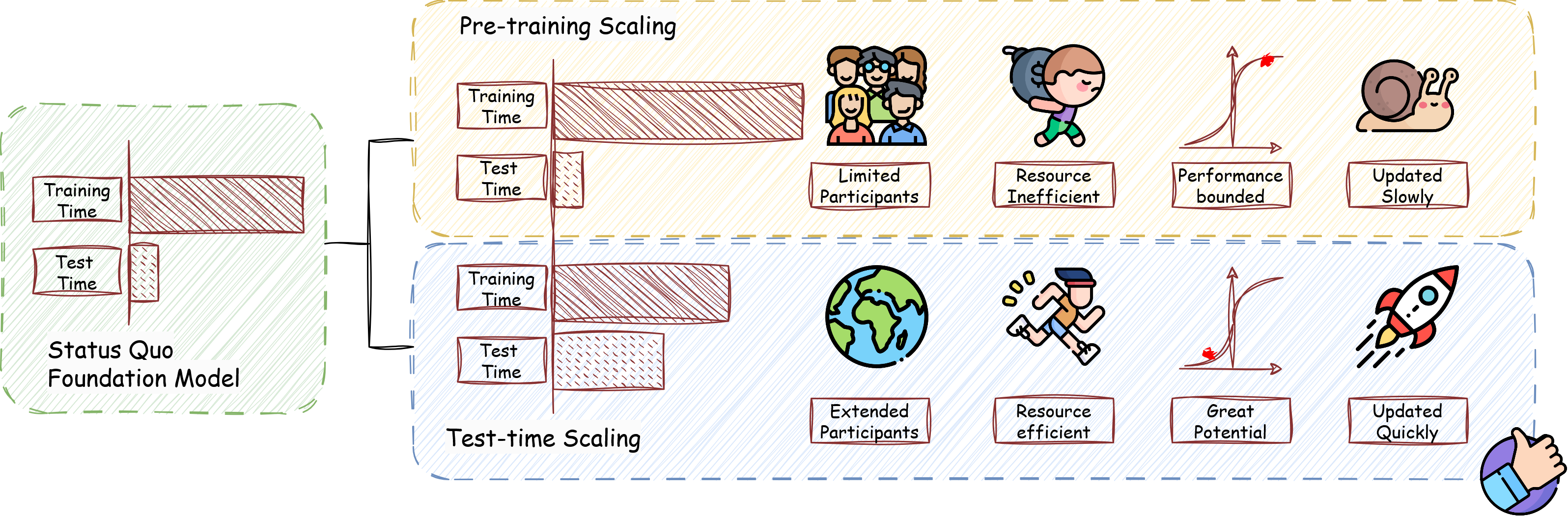

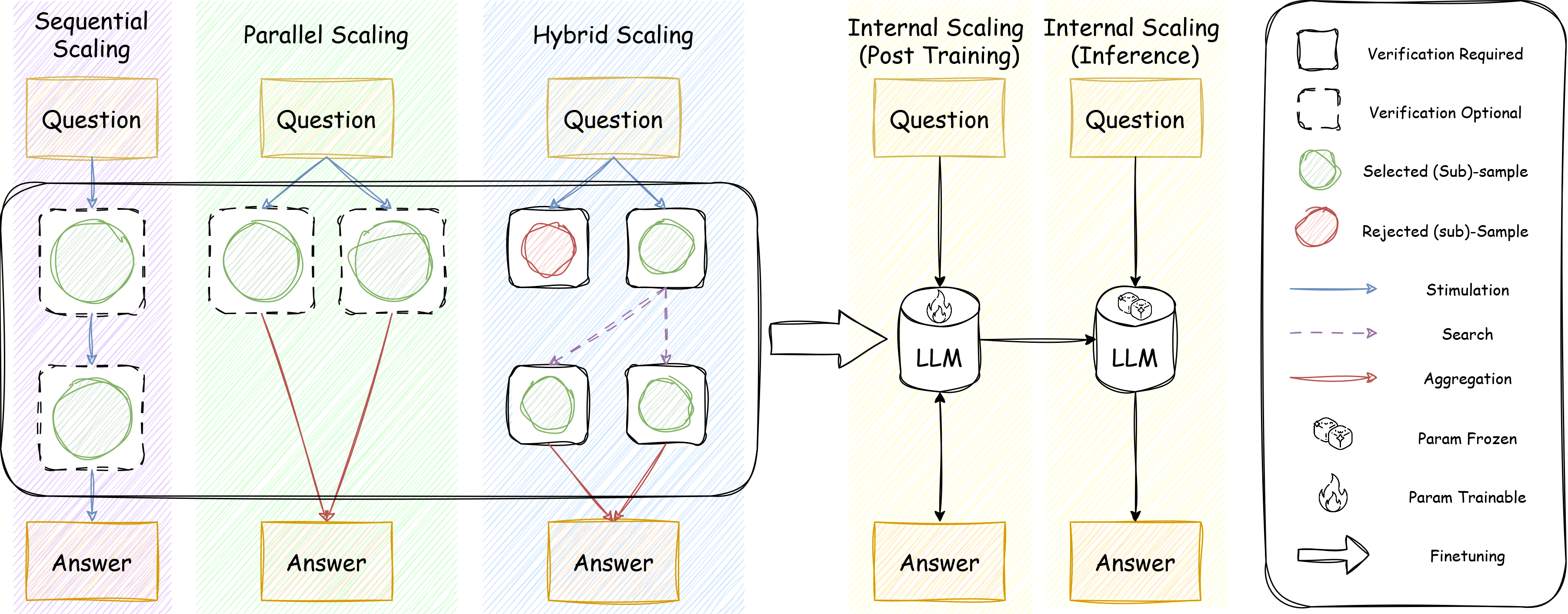

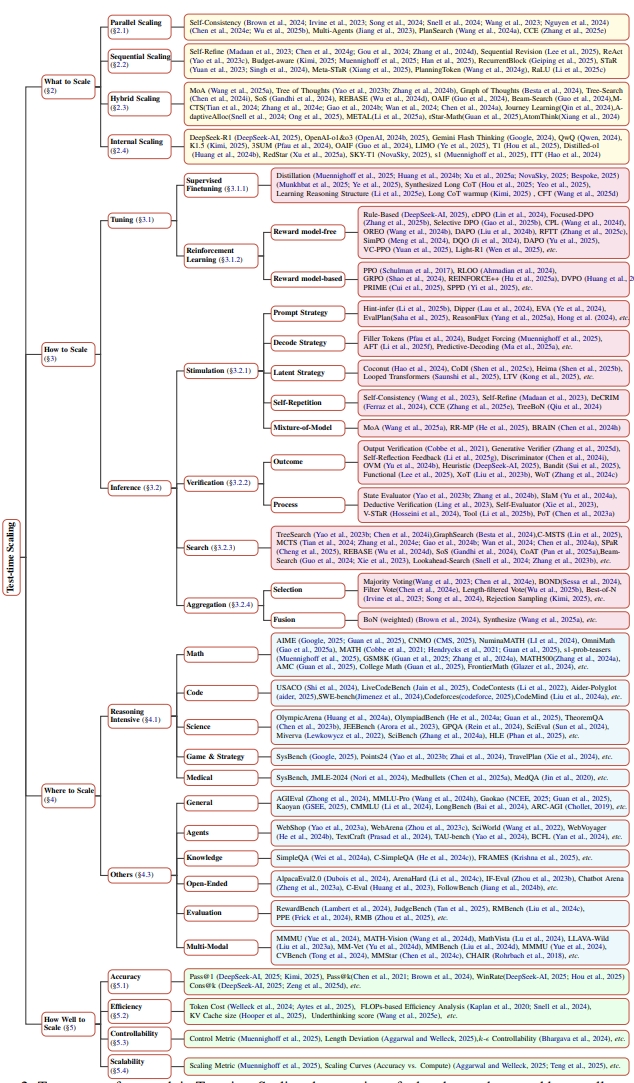

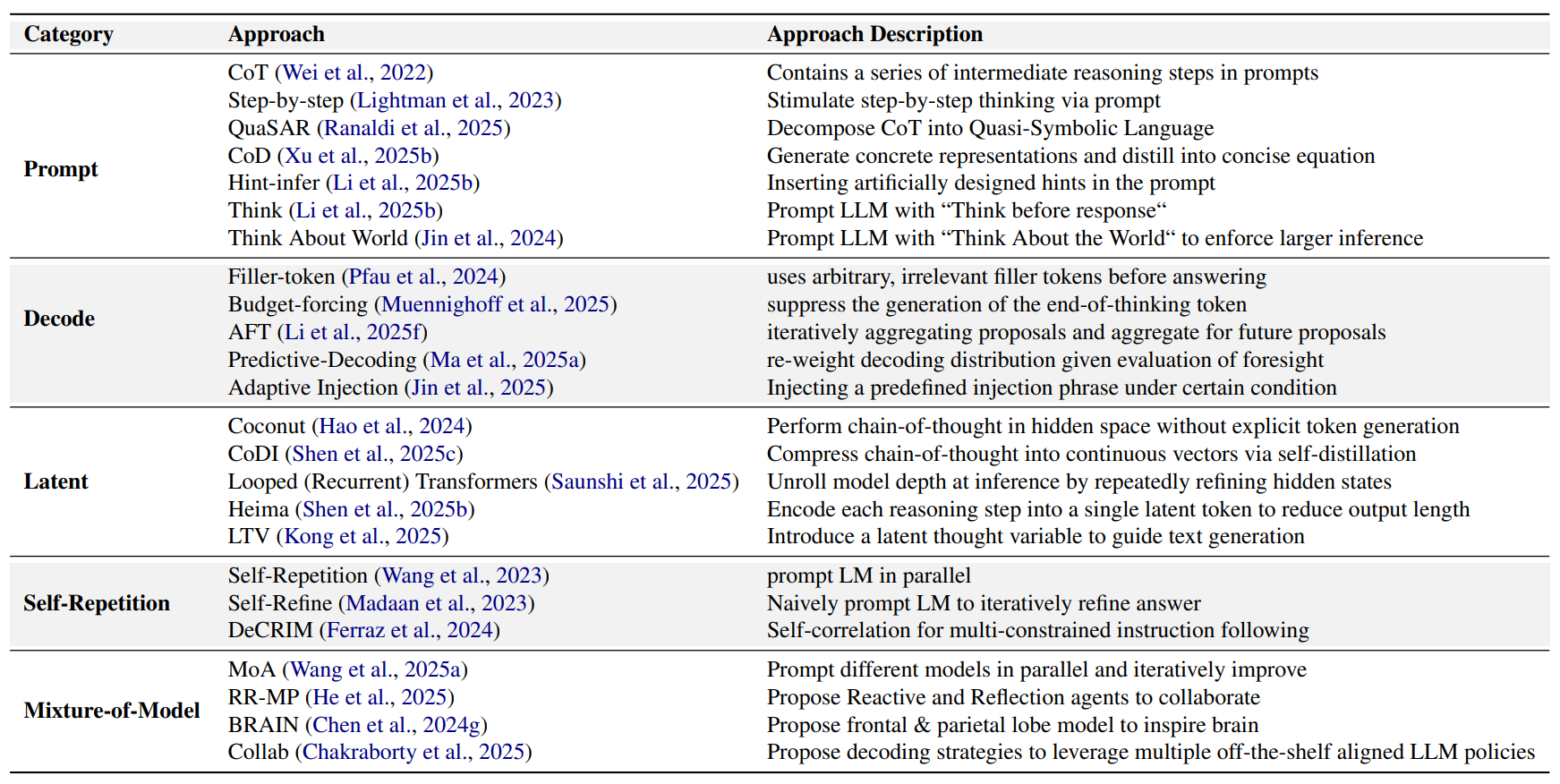

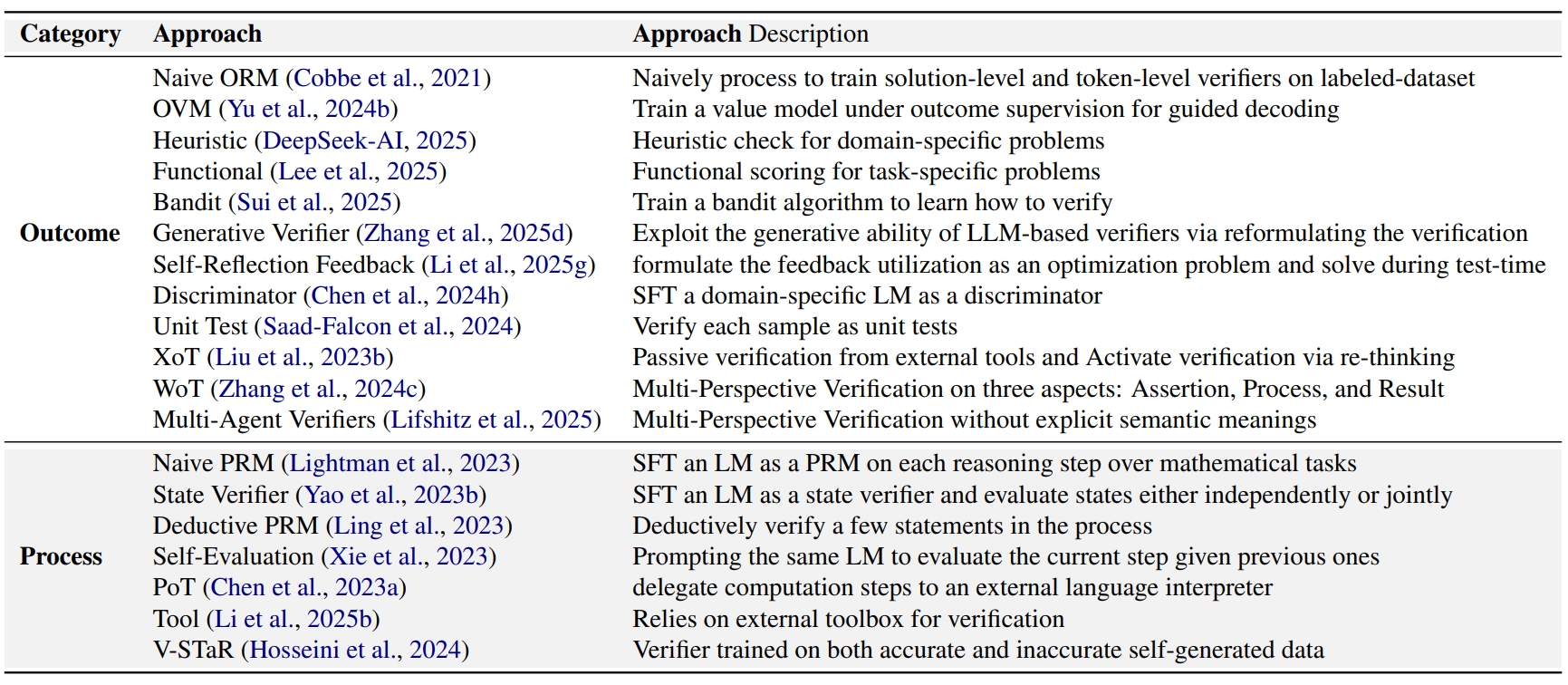

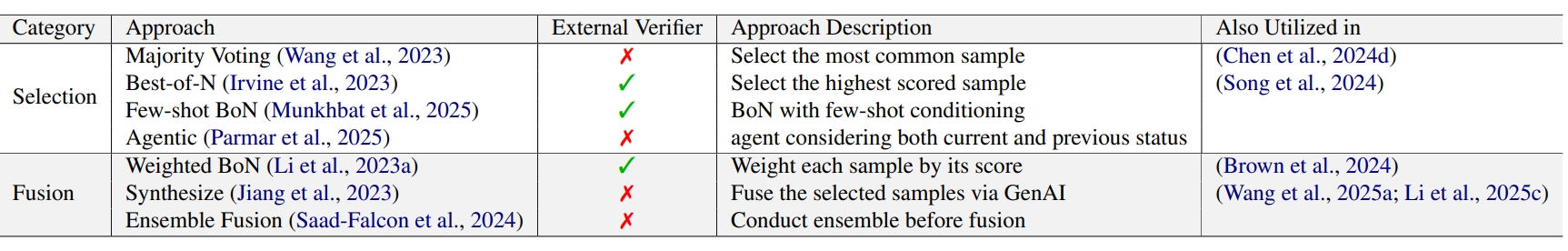

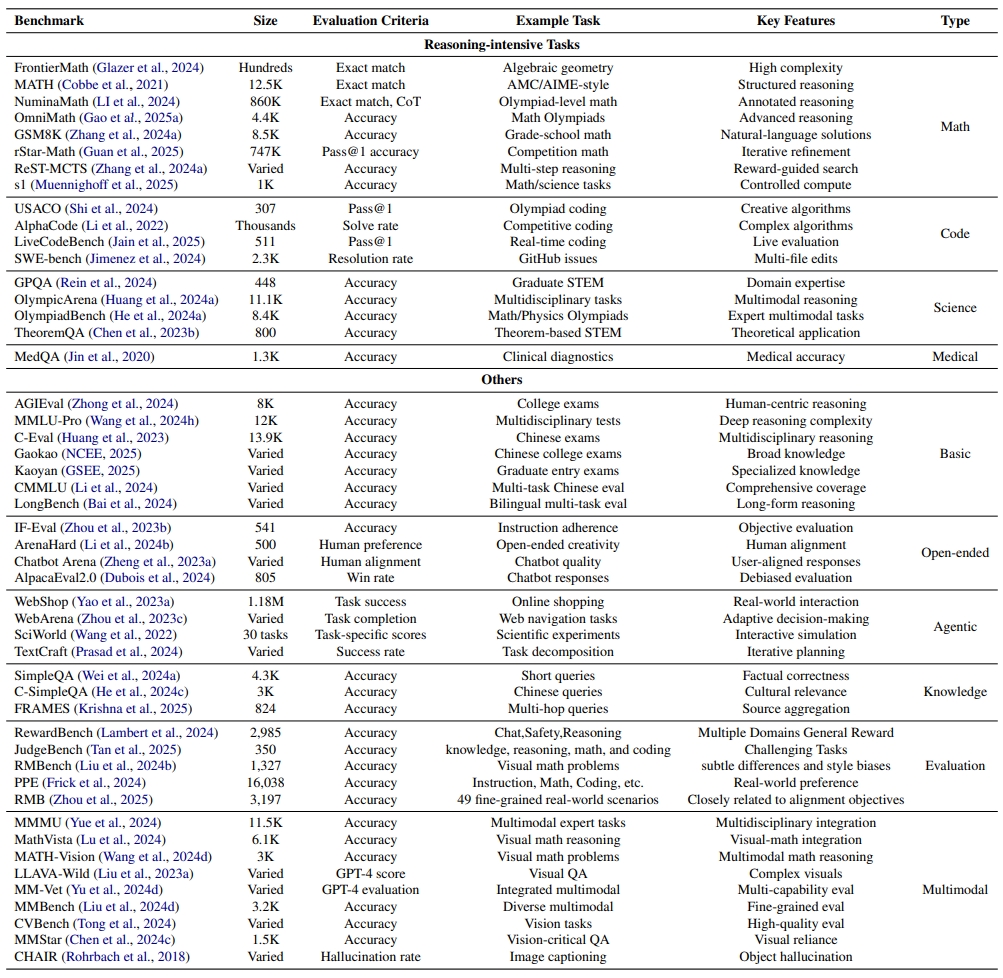

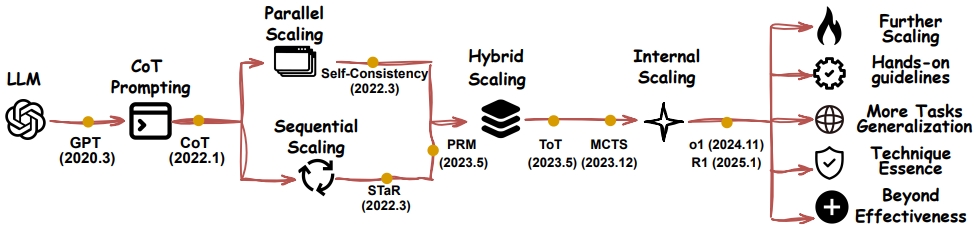

As enthusiasm for scaling computation (data and parameters) in the pretraining era gradually diminished, test-time scaling (TTS), also referred to as “test-time computing”, has emerged as a prominent research focus. Recent studies demonstrate that TTS can further elicit the problem-solving capabilities of large language models (LLMs). This has enabled significant breakthroughs not only in specialized reasoning tasks, such as mathematics and coding, but also in general tasks like open-ended Q&A. However, despite the explosion of recent efforts in this area, there remains an urgent need for a comprehensive survey that offers a systematic understanding. To fill this gap, we propose a unified, multidimensional framework structured along four core dimensions of TTS research: what to scale, how to scale, where to scale, and how well to scale. Building on this taxonomy, we conduct an extensive review of methods, application scenarios, and assessment aspects. We also present an organized decomposition that highlights the unique functional roles of individual techniques within the broader TTS landscape. From this analysis, we distill the major developmental trajectories of TTS to date and offer hands-on guidelines for practical deployment. Furthermore, we identify several open challenges and offer insights into promising future directions. These include further scaling, clarifying the functional essence of techniques, generalizing to more tasks, and deeper attribution.

“What to scale” refers to the specific form of TTS that is expanded or adjusted to enhance an LLM’s performance during inference.
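One concrete answer to “what to scale” is the number of candidate answers sampled in parallel for a single query. The sketch below scales that count, N, and aggregates the candidates by majority vote, which is the idea behind self-consistency. It is a minimal illustration under stated assumptions and is not code from the survey. `toy_sample` is a hypothetical, deterministic stand-in for one stochastic LLM call. Both `parallel_scaling` and `majority_vote` are illustrative names.

```python
from collections import Counter
from typing import Callable, List


def majority_vote(answers: List[str]) -> str:
    """Return the most frequent answer; ties go to the answer seen first."""
    # Counter.most_common orders equal counts by first-encountered order.
    return Counter(answers).most_common(1)[0][0]


def parallel_scaling(sample: Callable[[int], str], n: int) -> str:
    """Draw n candidate answers and aggregate them by majority vote.

    `sample` is a hypothetical stand-in for one stochastic LLM call.
    `n` is the quantity being scaled at test time.
    """
    answers = [sample(i) for i in range(n)]
    return majority_vote(answers)


def toy_sample(seed: int) -> str:
    # Hypothetical fake "model": the first sample is wrong, later ones mostly agree.
    return ["41", "42", "42", "40", "42"][seed % 5]


print(parallel_scaling(toy_sample, 1))  # a single sample returns "41"
print(parallel_scaling(toy_sample, 5))  # five samples vote for "42"
```

Here, spending more inference compute (a larger `n`) lets the aggregate recover from an unlucky single sample. Other choices of what to scale, such as the length of sequential refinement, would replace the parallel sampling loop.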

Test-time Scaling Paper Summary

We recognize that any individual's perspective is limited. We hope our survey provides an open and practical platform where everyone in the community we are building can share their experience with TTS in practice. These experiences are invaluable and will benefit everyone. If you contribute valuable guidelines, we will include them in the PDF version of the paper.

@misc{zhang2025whathowwherewell,
  title={What, How, Where, and How Well? A Survey on Test-Time Scaling in Large Language Models},
  author={Qiyuan Zhang and Fuyuan Lyu and Zexu Sun and Lei Wang and Weixu Zhang and Zhihan Guo and Yufei Wang and Niklas Muennighoff and Irwin King and Xue Liu and Chen Ma},
  year={2025},
  eprint={2503.24235},
  archivePrefix={arXiv},
  primaryClass={cs.CL},
  url={https://arxiv.org/abs/2503.24235},
}
